The next data center is in orbit

Why Google is going to space to solve AI's biggest physical constraint: Energy.

If you spend enough time building software, you eventually realize every problem is a physics problem.

We like to live in our world of abstractions: containers, services, APIs, and models. But beneath it all, there’s always a physical constraint. Your database isn’t slow because of “the cloud”; it’s slow because a spinning piece of metal has to find a specific magnetic sector, or because the light carrying your packets through a fiber optic cable can never beat the speed limit of the universe.

For the last decade, the biggest problems in software have been about coordination. How do we get 10,000 servers in a distributed system to agree on a single value?

Now, a new constraint is rapidly becoming the only one that matters: energy.

Artificial Intelligence, particularly the large-scale models we’re all scrambling to build on, isn’t magic. It’s a brute-force statistical process that runs on specialized hardware. That hardware—TPUs, GPUs, whatever’s next—turns megawatts of electricity into matrix multiplications. And the demand for those multiplications is growing at a rate that is starting to look exponential.

This is no longer a software problem. It’s not even a data center problem. It’s a power generation and resource problem. Google, in its “Project Suncatcher” paper, seems to have reached the same conclusion. Their proposed solution is so audacious that it reframes the entire problem:

Don’t move the energy to the compute. Move the compute to the energy.

Working backwards from infinite energy

The Sun is the ultimate energy source in our solar system. It outputs more than 100 trillion times our species’ total electricity production. On Earth, we catch a laughably small, filtered fraction of that. A solar panel on the ground is idle half the time (at night) and spends the other half looking through a cloudy, turbulent atmosphere.
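As a quick sanity check on the “100 trillion times” figure, here is a back-of-the-envelope calculation. It assumes the IAU nominal solar luminosity of about 3.828 × 10^26 W and roughly 30,000 TWh of global electricity generation per year; the generation figure is an approximate assumption, not a number taken from the paper.

```python
# Back-of-the-envelope check of "more than 100 trillion times".
# Assumptions (not from the paper): IAU nominal solar luminosity and
# roughly 30,000 TWh/year of global electricity generation.
SOLAR_LUMINOSITY_W = 3.828e26               # total power radiated by the Sun, watts
GLOBAL_ELECTRICITY_TWH_PER_YEAR = 30_000.0  # approximate, assumed
HOURS_PER_YEAR = 8_760.0


def average_power_w(twh_per_year: float) -> float:
    """Convert an annual energy total (TWh) to average continuous power (W)."""
    return twh_per_year * 1e12 / HOURS_PER_YEAR  # 1 TWh = 1e12 Wh


human_power_w = average_power_w(GLOBAL_ELECTRICITY_TWH_PER_YEAR)
ratio = SOLAR_LUMINOSITY_W / human_power_w

print(f"Average human electricity production: {human_power_w / 1e12:.2f} TW")
print(f"Sun / humanity: {ratio:.1e}")
```

Humanity’s electricity works out to a few terawatts of average power, and the ratio lands at roughly 1.1 × 10^14, a little over 100 trillion.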

Place that same panel in a “dawn-dusk, sun-synchronous” low-Earth orbit (LEO), however, and the physics changes. The satellite circles the Earth while perpetually riding the terminator (the line between night and day), so its solar panel is exposed to near-constant, unfiltered sunlight. That makes it up to eight times more productive than its terrestrial twin, and it all but eliminates the need for heavy, expensive batteries.
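Where does a multiplier like “up to eight times” come from? A rough sketch, assuming the solar constant above the atmosphere (about 1361 W/m²), about 99% illumination in a dawn-dusk orbit, and a hypothetical ground site that peaks at 1000 W/m² with a 17% capacity factor. The ground numbers are illustrative assumptions, not figures from the paper.

```python
# Rough annual energy yield per square metre of panel, orbit vs. ground.
# Panel efficiency is assumed identical in both places, so it cancels out.
SOLAR_CONSTANT_W_M2 = 1361.0  # irradiance above the atmosphere at 1 AU
HOURS_PER_YEAR = 8_760.0


def annual_yield_kwh_per_m2(peak_irradiance_w_m2: float, capacity_factor: float) -> float:
    """Incident solar energy per m^2 per year, given peak irradiance and the
    fraction of peak delivered on average (capacity factor)."""
    return peak_irradiance_w_m2 * capacity_factor * HOURS_PER_YEAR / 1_000.0


# Assumption: a dawn-dusk orbit is sunlit ~99% of the time at full irradiance.
orbit = annual_yield_kwh_per_m2(SOLAR_CONSTANT_W_M2, 0.99)
# Hypothetical ground site: 1000 W/m^2 at noon, 17% capacity factor
# (night, sun angle, and weather all folded in).
ground = annual_yield_kwh_per_m2(1_000.0, 0.17)

print(f"orbit: {orbit:,.0f} kWh/m^2/yr, ground: {ground:,.0f} kWh/m^2/yr")
print(f"advantage: {orbit / ground:.1f}x")
```

With these inputs the orbital panel collects just under eight times as much energy. Sunnier ground sites shrink the ratio and cloudier ones grow it, which is why the claim is “up to.”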

The old sci-fi dream was to build massive solar arrays in space and beam the power back to Earth. This is a monumentally hard transmission problem.

Project Suncatcher proposes a simpler, more radical idea: just build the data center right there.

The proposal isn’t for some monolithic, Death Star-style space station that requires robotic assembly. The architecture is pure distributed systems thinking: a “constellation” of many smaller, solar-powered satellites. Each satellite is a node in the cluster, carrying Google’s own TPU accelerator chips.

This is still a data center, just one where the nodes are held together by orbital mechanics and the network runs through the vacuum of space.

Solving the four foundational challenges

This sounds like a moonshot, and it is. Google’s own blog post frames it as one, in the tradition of its quantum computing or self-driving car (Waymo) projects.

But the paper isn’t just a “what if.” It’s a systems-level feasibility study. The authors identify the four hardest, potentially show-stopping problems and argue, with analysis and early experiments, that none of them requires violating the laws of physics. They are “just” engineering problems.

1. The data center-scale communication challenge

This is the big one. A modern ML training cluster isn’t just a pile of chips; it’s a deeply interconnected network. The optical “Inter-Chip Interconnect” (ICI) in a Google TPU pod moves hundreds of gigabits per second per chip.

Typical inter-satellite links (ISLs) are not built for this. They are designed to connect one satellite to another, far apart, at rates of 1 to 100 gigabits per second in total. That’s a rounding error for an AI workload.

The solution isn’t a magical new laser. It’s a trade-off, rooted in physics.

The power you receive from a transmitter scales with the inverse square of the distance. Double the distance, and you get one-quarter of the power. This is the enemy of all wireless communication.

Google’s plan is to turn this enemy into an ally. To get the thousands-of-times-higher power levels needed for terrestrial data center tech, they just dramatically shrink the distance. Instead of flying 1,000 km apart, Project Suncatcher satellites will fly in a tight formation, kilometers or less apart.
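The payoff of shrinking the distance follows directly from the inverse-square law. A minimal sketch, assuming idealized far-field spreading where received power scales as 1/d²; the helper name is hypothetical.

```python
def relative_received_power(d_near_km: float, d_far_km: float) -> float:
    """Factor by which received power rises when the link distance shrinks
    from d_far_km to d_near_km, assuming inverse-square spreading (P ~ 1/d^2)."""
    return (d_far_km / d_near_km) ** 2


# Compare a conventional ~1,000 km link with progressively tighter formations.
for near_km in (100.0, 10.0, 1.0):
    gain = relative_received_power(near_km, 1_000.0)
    print(f"{near_km:>6.0f} km vs 1000 km: {gain:,.0f}x more received power")
```

Even a modest cut, from 1,000 km to a few tens of kilometers, buys a factor of about a thousand. Treat the larger numbers as upper bounds: the 1/d² model only holds while the beam is much wider than the receiving aperture, and at very short range the receiver already captures most of the beam.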

At this “close” range, there’s enough received power to use high-bandwidth COTS (Commercial Off-The-Shelf) techniques like Dense Wavelength Division Multiplexing (DWDM), which packs dozens of signals, each on its own wavelength, into a single fiber (or, in this case, a single free-space optical beam). Get even closer, say under 10 km, and you can add spatial multiplexing, which is like having an array of parallel laser beams.

This is a beautiful architectural trade-off. We accept a massive new problem (flying in formation) to solve an impossible one (high-bandwidth networking). Google’s bench-scale demonstrator already hit 800 Gbps in each direction (1.6 Tbps total) with a single transceiver pair. The physics works.
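The two multiplexing tricks compound: capacity is roughly wavelengths per beam × rate per wavelength × number of parallel beams. A minimal sketch of that arithmetic; the channel counts and per-channel rates below are hypothetical, chosen for illustration, and are not the configuration of Google’s demonstrator.

```python
def aggregate_capacity_gbps(wavelengths: int, gbps_per_wavelength: float,
                            parallel_beams: int = 1) -> float:
    """One-way capacity of a free-space optical link that stacks DWDM
    (many wavelengths per beam) with spatial multiplexing (several beams)."""
    return wavelengths * gbps_per_wavelength * parallel_beams


# Hypothetical configurations, for illustration only.
configs = {
    "single wavelength": (1, 100.0, 1),
    "DWDM": (8, 100.0, 1),
    "DWDM + 4 parallel beams": (32, 100.0, 4),
}
for name, (wl, rate, beams) in configs.items():
    tbps = aggregate_capacity_gbps(wl, rate, beams) / 1_000
    print(f"{name:>25}: {tbps:.1f} Tbps")
```

The multiplicative structure is the point: each extra wavelength or beam scales the whole link, which is how a few close-range transceivers can approach data center-class bandwidth.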

2. Controlling tightly-clustered formations

Of course, this trade-off creates a new nightmare: orbital dynamics.

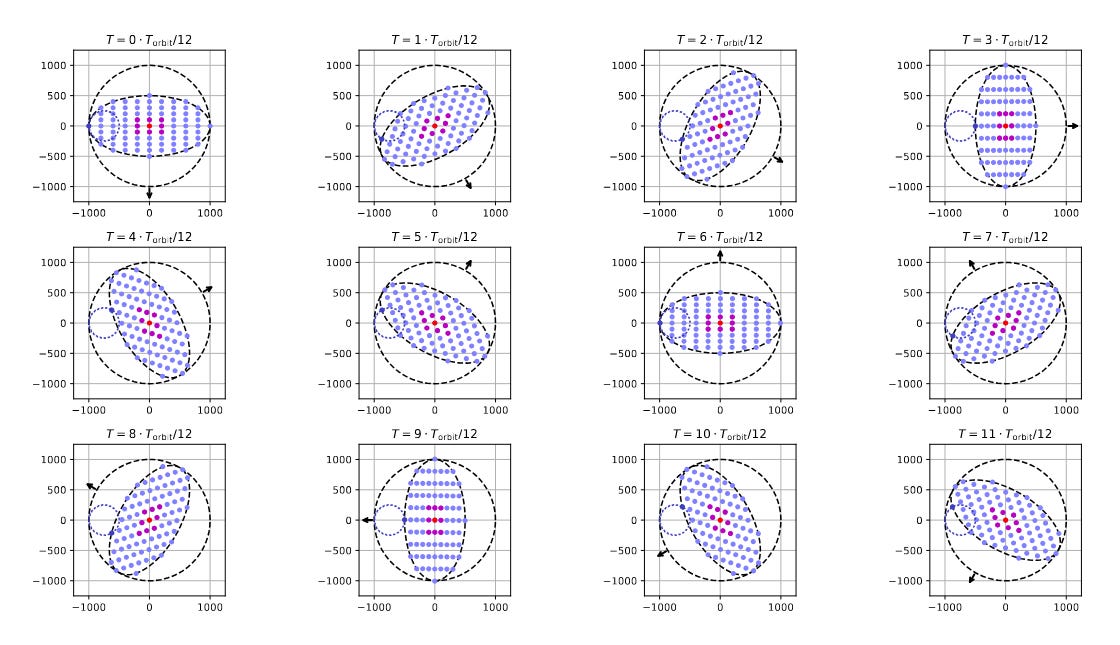

Flying one satellite is hard. Flying a constellation is complex. Flying a constellation of satellites hundreds of meters apart is a choreography problem that gives me anxiety just thinking about it.

Every tiny perturbation will try to tear the formation apart: the fact that the Earth is a slightly squashed “oblate” spheroid rather than a perfect point mass (captured by the “J2 term” of its gravity field), or the faint whisper of atmospheric drag.

This is where the “AI building AI” part comes in. The team modeled the cluster dynamics, starting with the classic Hill-Clohessy-Wiltshire equations and then refining them with a JAX-based differentiable model. This allows them to account for all the messy, non-Keplerian perturbations.

The result? It seems feasible. The models show that only modest “station-keeping” maneuvers (small thruster-firings) will be needed to keep the cluster stable.
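The Hill-Clohessy-Wiltshire (HCW) equations that the team started from describe one satellite’s motion relative to a reference point on a circular orbit. Below is a minimal sketch of their closed-form in-plane solution (x radial, y along-track), assuming point-mass gravity, no drag, no J2, and a hypothetical ~650 km altitude. It is not the team’s JAX model; it only shows why initial conditions matter so much in formation flying.

```python
import math

MU_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6_378_137.0      # Earth's equatorial radius, m


def mean_motion(altitude_m: float) -> float:
    """Mean motion n (rad/s) of a circular orbit at the given altitude."""
    a = R_EARTH + altitude_m
    return math.sqrt(MU_EARTH / a**3)


def hcw_position(x0, y0, vx0, vy0, n, t):
    """Closed-form in-plane HCW solution: radial (x) and along-track (y)
    offsets in metres after t seconds, from initial offsets and velocities."""
    s, c = math.sin(n * t), math.cos(n * t)
    x = (4 - 3 * c) * x0 + (s / n) * vx0 + (2 / n) * (1 - c) * vy0
    y = (6 * (s - n * t) * x0 + y0
         - (2 / n) * (1 - c) * vx0
         + ((4 * s - 3 * n * t) / n) * vy0)
    return x, y


n = mean_motion(650e3)  # assumed altitude, for illustration
period = 2 * math.pi / n
x0 = 100.0              # start 100 m "above" the reference point

# Naive start (no relative velocity): the along-track secular term drifts.
_, y_drift = hcw_position(x0, 0.0, 0.0, 0.0, n, period)
# Matched start (vy0 = -2 n x0) cancels the drift: a closed relative orbit.
x_b, y_b = hcw_position(x0, 0.0, 0.0, -2 * n * x0, n, period)

print(f"orbital period: {period / 60:.1f} min")
print(f"naive start, along-track drift after one orbit: {y_drift:.0f} m")
print(f"matched start, offset after one orbit: x={x_b:.3f} m, y={y_b:.3f} m")
```

A 100 m radial offset with the wrong velocity drifts several kilometers per orbit, while the matched start returns to where it began. Real perturbations like J2 and drag break that ideal closure slowly, which is what the modest station-keeping maneuvers have to correct.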

3. Radiation tolerance of TPUs

Space is not a friendly place for silicon. It’s flooded with penetrating protons and galactic cosmic rays that cause two major problems: “Total Ionizing Dose” (TID), the slow, cumulative damage, and “Single Event Effects” (SEEs), where a single particle can flip a bit and corrupt data or crash a chip.
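To see why a single flipped bit matters for ML workloads, here is a small sketch that simulates a single-event upset on an IEEE 754 float32 value, a common format for weights and activations. The helper `flip_bit_float32` is hypothetical and written for illustration; it does not model which bits radiation actually hits.

```python
import struct


def flip_bit_float32(value: float, bit: int) -> float:
    """Return `value` as it would read after one bit of its float32
    encoding is flipped (bit 0 = least significant mantissa bit)."""
    (bits,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", bits ^ (1 << bit)))
    return flipped


weight = 1.0
print(flip_bit_float32(weight, 0))   # lowest mantissa bit: 1.0000001192092896
print(flip_bit_float32(weight, 30))  # top exponent bit: inf
print(flip_bit_float32(weight, 31))  # sign bit: -1.0
```

Some flips are harmless noise; others turn a weight into infinity, which then propagates through every downstream matrix multiplication.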

The traditional solution is “rad-hardened” chips. But you can’t buy a rad-hardened TPU, and rad-hardened hardware typically lags the cutting edge by generations, sometimes decades. The entire point of this project is to use modern ML accelerators.

So, Google did the next best thing: they shot their TPU with a proton beam.

They took a commercial v6e Trillium TPU and blasted it to simulate the LEO environment. The chip is surprisingly tough. The most sensitive part, the High Bandwidth Memory (HBM), only started showing “irregularities” at a dose of 2 krad(Si).

The expected five-year mission dose, with shielding, is only 750 rad(Si).

Even the most sensitive component tolerated nearly three times the expected mission dose (2,000 vs. 750 rad(Si), a margin of about 2.7x). It’s a stunning validation that COTS hardware is viable for this.
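The margin arithmetic, sketched with the two dose figures from the test above. The years-to-onset estimate additionally assumes the dose accumulates at a constant rate over the mission, which is a simplification; it also covers only cumulative dose (TID), not single-event effects.

```python
# Dose figures from the proton-beam test described above.
HBM_ONSET_RAD_SI = 2_000.0   # 2 krad(Si): first HBM irregularities
MISSION_DOSE_RAD_SI = 750.0  # expected shielded five-year dose
MISSION_YEARS = 5.0


def dose_margin(tolerated: float, expected: float) -> float:
    """How many times the expected dose the hardware tolerated."""
    return tolerated / expected


margin = dose_margin(HBM_ONSET_RAD_SI, MISSION_DOSE_RAD_SI)
# Assumption: dose accumulates at a constant rate over the mission.
dose_rate_per_year = MISSION_DOSE_RAD_SI / MISSION_YEARS
years_to_onset = HBM_ONSET_RAD_SI / dose_rate_per_year

print(f"margin: {margin:.2f}x")                                      # 2.67x
print(f"years until HBM onset at that rate: {years_to_onset:.1f}")  # 13.3
```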

4. The economic turning point

The first three challenges are about technical feasibility. But this last one is the most important. It’s the answer to “Why now?”

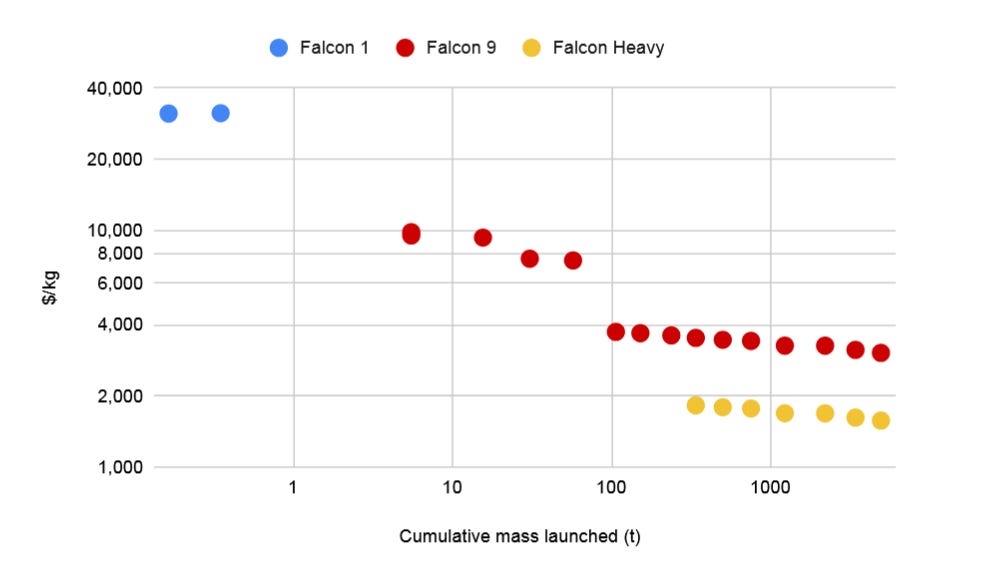

This idea has been floating around for decades. The barrier wasn’t the physics; it was the economics. The cost of launch was astronomical, and it killed every business plan on the desk.

That is no longer true.

Thanks largely to SpaceX, launch prices have followed a brutally consistent ~20% “learning rate”: with every doubling of cumulative mass launched, the price per kg drops by roughly 20%. That puts us on a collision course with a magic number.

The feasibility analyses for these kinds of space-based systems all circle one threshold: $200/kg to LEO.

At that price, the economics flip.
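How far away is $200/kg? A learning rate is Wright’s law: each doubling of cumulative output multiplies the price by (1 − learning rate). A minimal sketch, assuming the ~20% rate above and a hypothetical starting price of $1,500/kg (an illustrative number, not a quoted launch price).

```python
import math


def price_after_doublings(p0: float, doublings: float, learning_rate: float = 0.20) -> float:
    """Wright's-law price after a number of doublings of cumulative mass launched."""
    return p0 * (1.0 - learning_rate) ** doublings


def doublings_needed(p0: float, target: float, learning_rate: float = 0.20) -> float:
    """Doublings of cumulative launched mass needed to fall from p0 to target."""
    return math.log(target / p0) / math.log(1.0 - learning_rate)


start_price = 1_500.0  # hypothetical starting price, $/kg
target_price = 200.0   # the threshold discussed above, $/kg

d = doublings_needed(start_price, target_price)
print(f"{d:.1f} doublings -> about {2 ** d:,.0f}x today's cumulative launched mass")
print(f"check: ${price_after_doublings(start_price, d):,.0f}/kg")
```

Because progress is tied to doublings rather than calendar years, the timeline depends entirely on how fast launch volume grows.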

The Google paper’s analysis is the key. At $200/kg, the annualized cost of launching and operating a space-based system (on a per-kilowatt/year basis) becomes roughly comparable to the cost of just the energy for an equivalent terrestrial data center.

Read that again.

We’re approaching a crossover point where putting a 575 kg (Starlink v2 mini-class) satellite in orbit and running it for five years could cost about as much as simply paying the power bill for the equivalent compute on Earth.

That’s the punchline. That’s why this isn’t a sci-fi paper; it’s a business plan. With projections showing $200/kg is plausible by the mid-2030s, the time to start solving the hard engineering problems is now.
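To make the per-kilowatt/year comparison concrete, here is a sketch of its two sides. The 575 kg mass, five-year life, and $200/kg come from the text; the 20 kW power budget and $0.10/kWh electricity price are hypothetical, and the space side covers only amortized launch, not satellite hardware or operations.

```python
HOURS_PER_YEAR = 8_760


def launch_cost_per_kw_year(mass_kg: float, price_per_kg: float,
                            lifetime_years: float, power_kw: float) -> float:
    """Launch cost amortized over the satellite's life, per kW per year.
    Launch only: excludes hardware, operations, and ground segment."""
    return mass_kg * price_per_kg / lifetime_years / power_kw


def energy_cost_per_kw_year(price_per_kwh: float, utilization: float = 1.0) -> float:
    """Electricity cost of running a 1 kW load on the ground for a year."""
    return HOURS_PER_YEAR * utilization * price_per_kwh


space = launch_cost_per_kw_year(575, 200, 5, power_kw=20)  # 20 kW: hypothetical
ground = energy_cost_per_kw_year(0.10)                     # $0.10/kWh: hypothetical

print(f"space (launch only): ${space:,.0f}/kW/yr")
print(f"ground (energy only): ${ground:,.0f}/kW/yr")
```

Under these assumptions the two land in the same ballpark. Change the power budget or the local electricity price and the comparison swings, which is why the paper’s conclusion is framed as “roughly comparable” rather than a clean win.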

The road ahead

The initial analysis is clear: this isn’t blocked by physics or economics. But it’s still a massive engineering lift. The team still has to solve thermal management (how do you cool a power-dense TPU in a vacuum?), high-bandwidth ground communication, and on-orbit reliability (you can’t just send a tech to swap a failed TPU).
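Why is thermal management so hard? In a vacuum there is no air to carry heat away, so waste heat must ultimately leave as thermal radiation, governed by the Stefan-Boltzmann law. A rough radiator-sizing sketch, assuming a hypothetical 1 kW heat load, a 300 K radiator with emissivity 0.9, and a cold-space sink; it ignores absorbed sunlight and Earth’s infrared, both of which make the real problem harder.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def radiator_area_m2(heat_w: float, temp_k: float,
                     emissivity: float = 0.9, sink_temp_k: float = 0.0) -> float:
    """One-sided radiator area needed to reject heat_w purely by radiation.
    Ignores absorbed sunlight and Earth infrared."""
    flux_w_m2 = emissivity * SIGMA * (temp_k**4 - sink_temp_k**4)
    return heat_w / flux_w_m2


area = radiator_area_m2(1_000.0, 300.0)  # hypothetical 1 kW load at 300 K
print(f"radiator area per kW: {area:.2f} m^2")
```

Every kilowatt of TPU power needs square meters of radiator, so the radiator, not the chip, may set the size and mass of each satellite.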

The next step is to get hardware into space. A “learning mission” in partnership with Planet is scheduled to launch two prototype satellites by early 2027.

Project Suncatcher is a bet that the future of compute is constrained by terrestrial resources—land, water, and, most of all, energy. It’s the ultimate act of “moving the compute to the data.”

Except here, the “data” is the raw, 24/7 output of the Sun: roughly 3.8 × 10^26 watts.
